Ubiquitous Ambient Gaming - Sprint 1 : April 15-29, 2010

From EQUIS Lab Wiki

- Document animal game idea

- Get informal feedback from people about whether they think the game will be fun

- Implement to the point where:

- players can hear the animals

- when a player walks over an animal, they hear a sound and the animal is "picked up"

- if time permits, have animals move

- Test this first prototype with users to determine fun, usability

The game

All the cages of a zoo have been opened and the animals are wandering through the city. The player has to bring the animals back to their cages, and earns money for each animal he brings back. Some animals can be caught without any tools; others require tools, which a player can buy with the money he has earned. The goal is to earn as much money as possible. There are 4 types of animals: three that can be caught and brought back to their cages, and tigers, which can’t be caught and can eat the animals.

The rules are:

- To catch an animal, a player has to get close to the animal’s location.

- If a player gets too close to a tiger, the tiger will eat the animals he has collected.

- To get money, a player has to bring the animals back to their cages. The cages are at different locations depending on the type of animal.

- A player can only hear and see the tigers and the animals he can catch.

- A player can buy tools at the cage locations.

- The animals that need more expensive tools to be caught are worth more.

Evaluation

An informal evaluation of how fun the game would be was carried out. Several people read the rules of the game and told us whether they thought it would be fun. They were asked for their opinion on the game: what they liked, what they disliked, and what they would change.

Results

The evaluation was presented to 3 people. Below is a summary of the points that seemed most important.

- Changes proposed:

- Ability to see / hear the animals the player can’t catch, to remind him of their existence (the player would have a global view of the state of the game) and to push him to buy the tools needed to catch them.

- Points to clarify:

- How does a player match a cage with a type of animal?

- How does a player find a cage’s location?

- We could face a “How weird I look” factor: players may worry about what people in the street think of them running around, stopping, and going back the other way. This might dampen the fun of the game.

- Animals chosen: mostly African animals; both users mentioned monkeys and elephants first.

The detailed results of the users' feedback are here: Ubiquitous Ambient Gaming - Evaluation of the game Growl Patrol.

Progress of the code

The user can move either by GPS or with the 4 arrow keys. The user is always centered on the screen. Animals move around the map. There are 2 types of animals: cats and dogs. When the user reaches an animal, he catches it. The animal then follows the user around.
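As a rough illustration of the arrow-key movement and the catch check (not the project's actual code), the sketch below uses the step sizes from the test described further down this page: one degree per turn and 1 m per step. The `Player` class, the `is_caught` helper, and the 2 m catch radius are hypothetical; this page does not state how close a player must be to catch an animal.

```python
import math


class Player:
    """Hypothetical player state: position in metres, bearing in degrees (0 = north)."""

    def __init__(self, x=0.0, y=0.0, bearing_deg=0.0):
        self.x = x
        self.y = y
        self.bearing_deg = bearing_deg

    def turn(self, degrees):
        """Left/right arrows: rotate in place (positive = clockwise)."""
        self.bearing_deg = (self.bearing_deg + degrees) % 360.0

    def step(self, metres):
        """Up/down arrows: move along the current bearing (negative = backward)."""
        rad = math.radians(self.bearing_deg)
        self.x += metres * math.sin(rad)  # east component
        self.y += metres * math.cos(rad)  # north component


def is_caught(player, animal_xy, catch_radius_m=2.0):
    """True when the animal lies within the assumed catch radius of the player."""
    dx = animal_xy[0] - player.x
    dy = animal_xy[1] - player.y
    return math.hypot(dx, dy) <= catch_radius_m
```

With these conventions, pressing "up" once from the origin moves the player 1 m north, and a right turn of 90 degrees followed by "up" moves him 1 m east.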

GPS and positioning features

This part reuses the classes Jason wrote for his tourist application.

Sound features

- Each animal has a sound (it is the same for animals of the same type).

- When the animal is walking around, it emits a sound :

- The user perceives it according to their relative positions.

- An animal doesn’t make a sound all the time; there are delays between its sounds. The delay varies among the animals but is constant for a given animal. The delay is determined randomly.

- When an animal is caught, a sound is played to inform the user.

- When an animal follows a user, it doesn’t emit any sound.

- We are faced with “front-back confusion”: it is impossible to tell a sound coming from the front apart from a sound coming from the back. We thought of 2 simple methods to reduce it:

- past a certain angle, the user can’t hear a sound coming from behind,

- the same idea but smoother: the sound fades as the user turns his back to the animal
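The features listed above can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's implementation: the linear distance falloff, the sine-based pan, the 200 m audible range, and the names `relative_angle`, `stereo_gains`, and `AnimalVoice` are all hypothetical. Only the ideas come from this page: the sound depends on the relative positions, and each animal has a randomly chosen delay that then stays constant.

```python
import math
import random


def relative_angle(player_xy, bearing_deg, animal_xy):
    """Angle of the animal relative to the player's bearing, in [0, 360).

    0 = straight ahead, 90 = to the right, 180 = directly behind.
    """
    dx = animal_xy[0] - player_xy[0]  # east offset
    dy = animal_xy[1] - player_xy[1]  # north offset
    absolute = math.degrees(math.atan2(dx, dy))  # compass angle, 0 = north
    return (absolute - bearing_deg) % 360.0


def stereo_gains(theta_deg, distance_m, max_distance_m=200.0):
    """Return (left, right) gains in [0, 1] for one animal (assumed model)."""
    # Assumed linear falloff: full volume at 0 m, silent beyond max_distance_m.
    volume = max(0.0, 1.0 - distance_m / max_distance_m)
    # Assumed pan law: -1 = fully left, +1 = fully right.
    pan = math.sin(math.radians(theta_deg))
    return volume * (1.0 - pan) / 2.0, volume * (1.0 + pan) / 2.0


class AnimalVoice:
    """Delay between sounds: drawn randomly once, then constant for the animal."""

    def __init__(self, max_delay_s, rng=random):
        self.delay_s = rng.uniform(0.0, max_delay_s)

    def next_sound_time(self, last_sound_end_s):
        """Time at which the animal makes its next sound."""
        return last_sound_end_s + self.delay_s
```

Note that a pan based only on the sine of the angle gives the same left/right balance for an animal at 30 degrees and one at 150 degrees, which is one way to see where the front-back confusion comes from.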

Other features

- I created a class called MapTexture that controls several features of the map:

- To prevent the animals from running through buildings, we defined areas where the animals can’t go. To do that, we converted the map we are using to pure black and white (no shades of grey). The white parts are where the animals can go; the black parts are the forbidden areas.

- The size and the position of the play area can be modified (as long as it stays inside the map).
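The black-and-white map idea can be illustrated with a small sketch. It is not the MapTexture class itself, whose interface is not shown on this page: the class name `WalkabilityMask`, its methods, and the text-based map (`.` for white, `#` for black) are assumptions made for the example.

```python
class WalkabilityMask:
    """Hypothetical stand-in for a black-and-white map: True = white = walkable."""

    def __init__(self, rows):
        # rows: equal-length strings, '.' = white (walkable), '#' = black (forbidden)
        self.cells = [[ch == "." for ch in row] for row in rows]
        self.height = len(self.cells)
        self.width = len(self.cells[0])
        # By default the play area covers the whole map.
        self.area = (0, 0, self.width, self.height)

    def set_play_area(self, x, y, width, height):
        """Move or resize the play area; it must stay inside the map."""
        if x < 0 or y < 0 or width <= 0 or height <= 0:
            raise ValueError("play area must have a positive size and origin >= 0")
        if x + width > self.width or y + height > self.height:
            raise ValueError("play area must stay inside the map")
        self.area = (x, y, width, height)

    def can_walk(self, x, y):
        """True if cell (x, y) is inside the play area and white on the map."""
        ax, ay, aw, ah = self.area
        inside = ax <= x < ax + aw and ay <= y < ay + ah
        return inside and self.cells[y][x]
```

An animal's movement step would then be accepted only if `can_walk` returns True for the target cell; otherwise the animal picks another direction.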

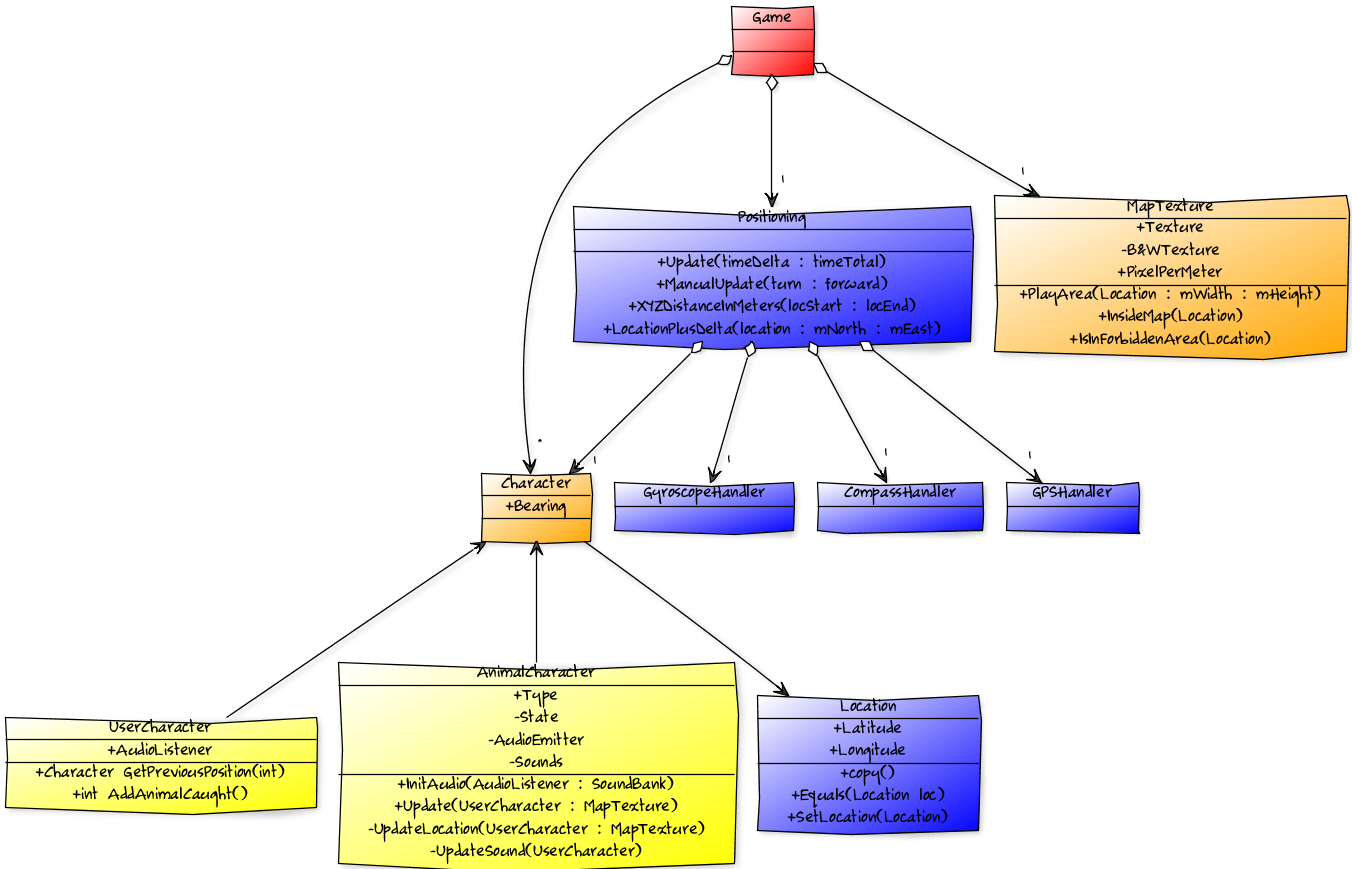

Architecture

Evaluation

A first test was carried out on spatial orientation using the ambient audio. I also compared the 2 ways of differentiating front and back sounds.

The users were asked to catch 2 animals among 4 without looking at the screen. They were asked to talk out loud so that I could collect information on their perception of the sounds.

The users played twice, each time with a different way of differentiating the front and back sounds. They didn’t know what difference there was between the two tests.

Parameters

- Four animals (2 cats and 2 dogs) are moving in an area of 400m x 400m.

- The player is placed in the middle of the area.

- The player can move using the 4 arrow keys:

- left / right: the user stays in place and turns, one degree at a time

- up / down: the user moves forward / backward by 1 m along his bearing

- Two ways of differentiating the back from the front were tested, in varying order:

- the sound stops when the animal passes a certain angle behind the bearing of the user: 120° < θ < 240°

- the sound fades progressively (linearly) as the angle between the animal and the player’s bearing gets close to 180 degrees: 110° < θ < 180° and 180° < θ < 250°

- The animals don’t make a sound all the time; there are delays between their sounds. The delay varies among the animals but is constant for a given animal. For the test, the maximum delay is 2 s.
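The two front/back methods compared in this test can be written as gain functions of the angle between the player's bearing and the animal. This is a sketch using the angle ranges listed above; the function names are hypothetical, and the assumption that the linear fade reaches silence exactly at 180 degrees is mine (the page only says the fade is linear).

```python
def cutoff_gain(theta_deg):
    """Abrupt method: silent when the animal is behind, 120 < theta < 240."""
    theta = theta_deg % 360.0
    return 0.0 if 120.0 < theta < 240.0 else 1.0


def fade_gain(theta_deg):
    """Smooth method: linear fade from 110 (or 250) degrees towards 180.

    Assumption: the gain reaches 0.0 exactly at 180 degrees.
    """
    theta = theta_deg % 360.0
    # Fold the angle so that 0 = straight ahead and 180 = directly behind.
    off_axis = min(theta, 360.0 - theta)
    if off_axis <= 110.0:
        return 1.0
    return (180.0 - off_axis) / 70.0
```

Either gain would multiply the volume already computed from distance and left/right position, so an animal straight ahead is unaffected by both methods.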

Results

- Way to differentiate front and back:

- Two users preferred the progressive fade and the other preferred the abrupt stop. The abrupt stop does not match intuitive perception (if a sound stops, it suggests that the animal has disappeared), but neither does the progressive fade (it is perceived as the animal moving away). In each case, the user chose by default: because he disliked the other method, not because he liked the one he chose.

- They all felt several times that the sound was coming from behind when in fact it was coming from the front.

- Either way, it seems that something else is needed to make the difference between front and back feel more natural.

- One of the users said he would have preferred no difference between front and back; he would have worked out where the sound was coming from by moving around (note that this user used the forward/backward arrows much more than the turn arrows).

- As for the sound orientation:

- The users perceived the left/right difference and the variation in volume.

- They also linked the variation in volume to distance, but they both felt it wasn’t completely natural, and they sometimes could not tell exactly how close they were to an animal, especially when they were near it. It seems that the variation in volume, especially for something close, must be noticeable and recognizable.

- Remarks on the sounds of the animals:

- A long delay between 2 sounds emitted by an animal forces the player to stop and wait for the animal to make a sound again. This can be good for the gameplay but might encourage the player to use the screen instead of the sound (a delay of 2 s seems too long).

- Always having the same delay for an animal, or even the same sound, is not natural, and it can get annoying for the player.

- Moreover, when two animals of the same type are emitting a sound, even if not at the same time, it can be hard for a user to focus on only one animal, and he can get mixed up. It seems that, when the animals have the same sound, the user can’t apply the “cocktail party effect”, which allows him to focus on one sound among many.

=> The results tend to show that a player would learn very quickly how to use the sound efficiently to orient himself (both users moved more quickly the second time, with equal or better accuracy). Moreover, the players were pleased to discover that they could catch an animal using only the sound orientation.

The detailed results of the users' evaluation are here: Ubiquitous Ambient Gaming - First evaluation of sound orientation and differentiation front/back.