Ubiquitous Ambient Gaming - Domain analysis

From EQUIS Lab Wiki


Ambient Audio

Definitions

Ambient audio is a specific form of ambient display, so it is important first to define what ambient displays are.

Ambient displays

According to the Oxford English Dictionary, ambient means “surrounding, encircling, encompassing, and environing”. Ambient displays are therefore displays that use the user’s surroundings to transmit information, whether visually, through audio or through any other sense [9]. The idea behind this is that we constantly use all our senses to gather information about our environment, mostly subconsciously and without attending to it explicitly. Current computing interfaces, however, largely ignore these rich ambient spaces and concentrate vast amounts of digital information in small rectangular windows. Ambient displays make it possible to spread this information across all five senses and to make better use of human capacities.

Ambient displays are well suited for delivering information to the user at a peripheral level [2][9], making him aware of the information without overburdening him with it. This kind of display can give information to the user without necessarily distracting him from his primary task: the information is displayed in a way that requires minimal attention and cognitive effort, so the user does not need to focus on it. The user can take in the information without ever bringing it into the foreground of attention, or can choose to focus on it whenever he wants.

Moreover, when designing an ambient display, the mapping of information sources must be thought through carefully [9]. The background media should not interfere with the user’s main task. For example, if the person is writing on a computer, a visual ambient display behind him will not be as effective as an audio display. Once chosen, however, the modality and spatial configuration of an ambient display should not change during use, since this might confuse the user.

An example of research on ambient displays is the AmbientROOM developed by Wisneski, Ishii and Dahley [9]. This prototype is a mini-office in which several ambient displays, such as ambient light, sound, airflow and physical motion, have been installed. It “puts the user inside the computer” and focuses on ambient ways of providing personal and work information to the user.


On the ceiling, the user can see water ripples that indicate the activity of a hamster left at home; on a wall next to the user, a pattern of illuminated patches represents the level of human activity in a work area; and whenever the digital whiteboard in a group space is used, the person in the personal office hears the sound of dry-erase pens.

Summary:

* can use any of the five senses

* is in the user’s periphery

* doesn’t require the user’s full attention

Ambient audio

As said previously, ambient audio is a particular kind of ambient display that uses audio to convey information. Audio is a very natural way of providing information, since we already use it constantly at a subconscious level [1][10]. Footsteps in the corridor tell us that somebody is passing; the sound of our computer tells us its state. We absorb this information subconsciously, without having to stop our main task. All these sounds are non-speech and occur at a peripheral level: they usually don’t relate to our primary task, yet they are processed easily without distracting us from our focus.

Moreover, many of our tasks require visual focus. When working at a desk, we read papers or work on a computer screen; much manual work demands precision and therefore visual attention; when taking care of somebody (an elderly person or a child), we need to monitor them visually; and even walking in the street demands much of our visual attention. In short, our visual attention is constantly in demand in everyday life, so audio seems a good way of providing information without overburdening the user.

Furthermore, sounds, and especially musical ones, are already desirable components of many human environments, public or private [1]. Music blends easily into the background, whether the person is working, driving or chatting, and it moves from the background to the foreground without requiring any effort from the user.

Summary:

* a type of ambient display

* non-speech sounds

* sounds are already used all the time, and their information is easily processed in the background

* our visual attention is constantly in demand

* music is desirable in our lives

Uses and tools

Ambient audio can be used to provide several types of information. In most cases, one or several sounds are associated with an object and give information about this object. The object can be anything, real or virtual, concrete or abstract. For example, a real object can be a person, a group of people or an animal, whereas a virtual object can be a task (like typing a report) or a stock price. A concrete object can be a whiteboard, whereas an abstract object can be the mood of a person.

When using ambient sound in an interface, one must consider two characteristics: the identity of the source and the information conveyed. An ambient sound must convey both efficiently (rapidly and as accurately as possible) to a listener. Both characteristics must be abstracted into sound, possibly modifying or losing part of the information in the process. When using ambient audio, one must therefore balance the abstractness of the sound against how immediately it can be interpreted [3].

Several tools are available to make the best use of ambient audio:

- First, the choice of the sound(s): which sounds should convey which information, and what kind of sound should be used? Should the sound match the object in reality (if the object is real), or would a completely abstract sound convey the information better?

- Second, simple variations can be applied to the sound, such as changes in volume, pitch or repetition, which can represent a distance, a progress, or any state or change related to the object.

- Moreover, the sound can be spatialized so that it seems to come from a precise direction [11]. Spatialized ambient audio is discussed further in the second chapter of this document.

- Ambient audio can also use earcons [4], audio icons invented by Sumikawa. An earcon is a short musical motif constructed to convey a small amount of information, mostly simple concepts or ideas.

- Finally, ambient audio can rely on the “cocktail party” effect [9][14]: humans are able to selectively shift their attention to a particular sound in a busy environment. The effect is named after the common party situation in which a person can focus on a single conversation in the midst of many other conversations and background noise. Thanks to this ability, a person can be presented with several pieces of information as ambient audio and still be able to understand them.
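To make the first tools more concrete, here is a minimal Python sketch that renders a short earcon as raw sine-wave samples and applies the three simple variations (volume, pitch, repetition) to it. The motif, the 8 kHz sample rate and the mapping in the hypothetical `earcon_for_state` function are illustrative assumptions, not taken from the cited works; a real application would pass the samples to an audio API instead of keeping them in a list.

```python
import math

SAMPLE_RATE = 8000  # Hz; a low rate keeps the illustration small

# Hypothetical earcon: a short rising A-major arpeggio as (frequency Hz, duration s) pairs.
BASE_MOTIF = [(440.0, 0.1), (554.37, 0.1), (659.25, 0.2)]


def vary(motif, semitones=0.0, repeats=1):
    """Transpose every note by `semitones` and repeat the whole motif `repeats` times."""
    ratio = 2 ** (semitones / 12)
    return [(freq * ratio, dur) for freq, dur in motif] * repeats


def render(motif, volume=1.0, sample_rate=SAMPLE_RATE):
    """Render a motif as sine-wave samples scaled by `volume`."""
    samples = []
    for freq, dur in motif:
        n = int(round(dur * sample_rate))
        samples.extend(
            volume * math.sin(2 * math.pi * freq * i / sample_rate) for i in range(n)
        )
    return samples


def earcon_for_state(level):
    """Hypothetical mapping of a normalized state (0..1) onto pitch, repetition and volume.

    Higher levels raise the pitch (up to one octave), repeat the motif more often
    (1 to 4 times) and play it louder (20% to 100% of full scale).
    """
    level = min(max(level, 0.0), 1.0)
    motif = vary(BASE_MOTIF, semitones=12 * level, repeats=1 + int(level * 3))
    return render(motif, volume=0.2 + 0.8 * level)
```

For example, a progress value of 0.5 yields the motif transposed up six semitones, played twice at 60% volume. Keeping one motif and varying only its parameters helps the listener recognize the source while still reading its state.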

Summary

* Uses

* Ambient audio conveys information on a source (real or virtual, concrete or abstract).

* A listener must identify the source and understand the information conveyed.

* Tools

* choice of sound

* simple variations of a sound: volume, pitch, repetition

* spatialization of the sound

* earcons

* cocktail party effect

Ambient audio and mobile devices

The defining feature of a mobile device is its mobility: the user wants to be able to use it at any time and in any place. For mobile applications to be efficient, several facts must be considered [5]. For example, interactions with the real world are more important than interactions with the application, so the user can give the application only limited attention. Moreover, the user’s activities in the real world can demand a high level of attention and involve several senses, such as sight, touch and hearing. Furthermore, the user’s interactions with his environment are context-dependent: the level of attention required and the senses used vary with the environment. As a result, the user’s interactions with a mobile application are mostly brief and driven by the external environment.

Unfortunately, most mobile applications don’t take the mobility of the user into account. They are still based on classic desktop user interfaces, which were designed for stationary users who can devote all their visual resources to the application. Games on mobile devices, for example, require the player’s visual attention, so the player can’t walk while playing. GPS applications often couple the visual interface with an audio interface, which lets the user free his sight and follow the audio instructions. But if the user’s attention is focused on something else when an instruction is given, such as crossing a road or talking to somebody, he has to wait for the application to repeat the information or stop to look at the visual interface.

An interface based on ambient audio lets a mobile application interact with the user at a peripheral level. This type of interface allows a mobile user to carry out tasks with the application while his eyes, hands or attention are otherwise engaged. Moreover, because it uses non-speech sounds, the application does not compete with the voice channel, which might be needed for other communication tasks.

Ambient audio interfaces are therefore well suited to applications built around the user’s mobility, whether they serve this mobility or make use of it. On the one hand, the application can provide information the user needs to move around without getting in the way of his movements. A GPS application could, for example, associate a sound with a destination and vary the sound to indicate the path to the user. On the other hand, an application such as a game could require the user to move, and using ambient audio instead of a visual interface would let the user stay mobile at all times.

Summary:

* Context

* The user’s mobility requires attention and involves the use of several senses, especially sight.

* Classical visual-based interfaces are not suited for mobile applications.

* Ambient audio advantages

* It leaves the user’s attention free for navigating.

* It is well suited to applications centered on the user’s mobility.

Ambient audio and positioning

A common issue for a mobile user is positioning: the user often needs to know his position relative to a destination or to his surroundings, or simply needs information about his surroundings. Position is usually described by two components: direction and distance. Ambient audio offers several ways to communicate positioning information, from the natural spatialization of sounds to arbitrary conventions for modulating sounds.
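Both components can be computed from the positions of the listener and of the source. The sketch below assumes a flat local coordinate system in meters (x to the east, y to the north) and a heading measured in degrees clockwise from north; with GPS coordinates, positions would first have to be projected onto such a plane. The function name is illustrative only.

```python
import math


def distance_and_relative_bearing(listener_xy, heading_deg, source_xy):
    """Return (distance in m, bearing of the source relative to the listener's heading).

    The relative bearing is in [-180, 180): 0 means straight ahead, positive
    values are to the listener's right, negative values to the left.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    distance = math.hypot(dx, dy)
    absolute_bearing = math.degrees(math.atan2(dx, dy))  # clockwise from north
    relative = (absolute_bearing - heading_deg + 180.0) % 360.0 - 180.0
    return distance, relative
```

A source 30 m east and 40 m north of a listener facing north is thus 50 m away at about 37° to the right. These two values are the raw material that spatialized or non-spatialized audio cues then have to convey.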

Spatialized ambient audio

Humans are able to locate the sounds they hear. For decades, researchers have studied how our ears and brain work together to localize sound. They discovered how the way a sound is modified on its way to each ear relates to our ability to locate it. These discoveries led to artificial mechanisms that reproduce this modification at each ear, tricking the brain into perceiving the sound as coming from a given position in space.

In this part, we briefly describe the mechanisms involved in locating a sound, then explain how an application can produce 3D sound artificially.

Perception of spatialized sound by humans

When a human hears a sound, he can usually tell where it was emitted from. This ability relies on a series of mechanisms linked to the human body [7][15], which this part briefly explains.

Eight main cues help us locate sounds in space:

- The Interaural Time Difference (ITD), which is the difference between the arrival times of a sound at the two ears.

- The Interaural Level Difference (ILD), which arises because the head blocks part of the sound and casts a kind of acoustic shadow that reduces the sound level at the ear farther from the source.

- The pinna and ear canal response, which comprises all the modifications the pinna and ear canal apply to a sound as it arrives at the ear. These modifications are unique to each person and involve reflection, shadowing, dispersion, diffraction, interference and resonance.

- The volume of a sound, which decreases with distance. Measurements show that the sound level drops by about 6 dB per doubling of distance.

- Reverberation, which consists of the echoes of a sound reflecting off solid objects. This effect is particularly noticeable in enclosed spaces.

- Occlusion, which occurs when the sound is heard from behind a solid object, such as a wall.

- Head motion, which is a key factor in determining the position of a sound in everyday life.

- Vision, which is used to confirm the direction of a sound and bind it to an object.
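The 6 dB figure for the volume cue is what the inverse-square law predicts for a point source in free field, an idealization that ignores reverberation and occlusion. The sound intensity I falls as 1/d² with distance d, so doubling the distance lowers the level by:

```latex
\Delta L = 10\log_{10}\frac{I(d)}{I(2d)} = 10\log_{10}\frac{(2d)^2}{d^2} = 20\log_{10} 2 \approx 6.02\ \text{dB}
```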

People use all these cues subconsciously each time they hear a sound. Reproducing them artificially to spatialize a sound virtually is, however, complicated.

Artificially spatialized sounds

Some techniques have been developed to artificially position a sound around a listener.

The first three cues (the Interaural Time Difference, the Interaural Level Difference and the pinna and ear canal response) are handled by Head-Related Transfer Functions (HRTFs) [7]. HRTFs attempt to accurately reproduce, at each ear of the listener, the sound pressure computed from the position of the source. The precision of an HRTF depends on the measurements and algorithms used to produce it. One issue is that each human is physically unique, and HRTFs model the modifications the body applies to a sound as it reaches the ears. To obtain a precise HRTF, and thus precise localization, the HRTF would have to be individualized for each listener, which is too time-consuming to be generalized. A lot of research has therefore gone into non-individualized HRTFs that still give good sound localization.
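In the time domain, applying an HRTF amounts to convolving the mono source signal with a pair of head-related impulse responses (HRIRs), one per ear, measured or modeled for the source’s direction. The minimal Python sketch below shows only that step. The two toy HRIRs are made-up values chosen so that the right ear receives the sound two samples later and at a lower level, as for a source on the listener’s left; real HRIRs come from measured sets with hundreds of taps per direction, and production engines use FFT-based convolution and interpolate between directions.

```python
def convolve(signal, kernel):
    """Direct-form linear convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter a mono signal through the left- and right-ear impulse responses."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy HRIR pair (hypothetical values): the right ear hears the source
# two samples later and quieter, as for a source on the listener's left.
HRIR_LEFT = [1.0, 0.3]
HRIR_RIGHT = [0.0, 0.0, 0.5, 0.15]

left, right = render_binaural([1.0, 0.0, -0.5], HRIR_LEFT, HRIR_RIGHT)
```

The delay between the two outputs stands for the ITD, their level difference for the ILD, and the shape of each response for the pinna and ear canal filtering, which is why individual differences in those shapes matter.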

The effects of occlusion and reverberation, along with vision, depend on the environment in which the sounds are played. For these cues to be used, the listener’s surroundings must be known precisely, whether they are virtual, like a player moving through a game world, or real, like a person using a GPS application. These cues are usually handled by the application’s sound engine.

Another issue with artificially spatialized sound is front-back confusion: with artificial sounds, listeners have been observed to have difficulty telling whether a sound comes from the front or from the back. This problem can be reduced if the user is allowed to move his head.
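The front-back ambiguity, and the reason head movement resolves it, can be illustrated with the interaural time difference alone. The sketch below assumes Woodworth’s spherical-head approximation, ITD = (a/c)(φ + sin φ), where φ is the source’s lateral angle, with a head radius a of 8.75 cm and a speed of sound c of 343 m/s; these are common textbook values, not figures from the sources cited here. A source 30° to the front-right and its mirror image 30° behind the right ear give the same ITD, but when the head turns right, the ITD of the front source shrinks while that of the rear source grows.

```python
import math

HEAD_RADIUS_M = 0.0875     # assumed average head radius
SPEED_OF_SOUND_MS = 343.0  # in air at about 20 degrees C


def itd_seconds(azimuth_deg):
    """Woodworth ITD for a source in the horizontal plane.

    Azimuth is measured clockwise from straight ahead. Only the lateral angle
    (the angle away from the median plane) matters, so a front source and its
    mirror image behind the interaural axis produce the same ITD.
    Positive values mean the sound reaches the right ear first.
    """
    lateral = math.asin(math.sin(math.radians(azimuth_deg)))
    return HEAD_RADIUS_M / SPEED_OF_SOUND_MS * (lateral + math.sin(lateral))


def itd_change_after_turn(source_azimuth_deg, head_turn_deg):
    """ITD change when the head turns right by `head_turn_deg` degrees."""
    before = itd_seconds(source_azimuth_deg)
    after = itd_seconds(source_azimuth_deg - head_turn_deg)
    return after - before


front, back = 30.0, 150.0  # 30 degrees front-right and its mirror image behind
same_itd = math.isclose(itd_seconds(front), itd_seconds(back))
disambiguated = itd_change_after_turn(front, 10.0) < 0 < itd_change_after_turn(back, 10.0)
```

Both `same_itd` and `disambiguated` are true: a static ITD cannot separate the two positions, whereas the sign of its change during a head turn can, which is why letting the user move his head helps.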

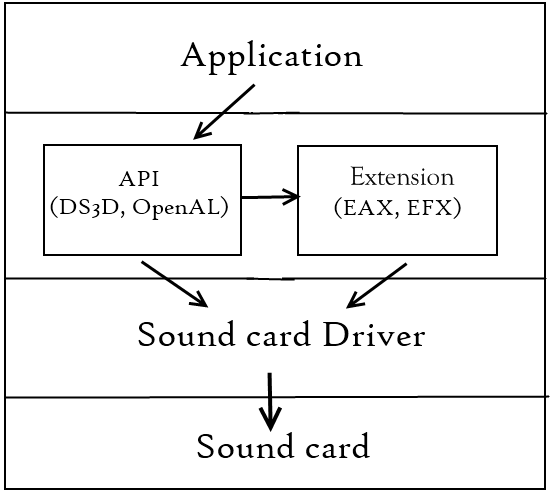

Production of artificially spatialized sound

When an application requires 3D sound, it uses an API (such as DirectSound or OpenAL) that provides a listener and sound sources. Both the listener and the sources have editable properties such as position, direction and velocity, and each source is assigned a sound. The programmer can modify these properties, along with volume-distance curves. The API then performs all the calculations, using HRTFs, to determine how the sound must be modulated at each eardrum or speaker according to these settings. This provides good 3D sound.
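The volume-distance curve is one of the properties the programmer controls. As an illustration, the Python function below reimplements the formula that the OpenAL 1.1 specification gives for its default distance model, AL_INVERSE_DISTANCE_CLAMPED, which is driven by the source properties AL_REFERENCE_DISTANCE, AL_ROLLOFF_FACTOR and AL_MAX_DISTANCE. It is a sketch for reasoning about the curve, not a binding to OpenAL; in a C program these values would be set with `alDistanceModel` and `alSourcef`, and the library would apply the gain itself.

```python
import math


def inverse_distance_clamped_gain(distance, reference_distance=1.0,
                                  rolloff_factor=1.0, max_distance=math.inf):
    """Distance attenuation following OpenAL's AL_INVERSE_DISTANCE_CLAMPED model.

    Defaults mirror OpenAL's defaults (reference distance 1, rolloff factor 1,
    effectively no maximum distance).
    """
    d = max(distance, reference_distance)  # no boost closer than the reference distance
    d = min(d, max_distance)               # no further attenuation beyond the maximum
    return reference_distance / (reference_distance + rolloff_factor * (d - reference_distance))


def gain_to_db(gain):
    """Convert a linear amplitude gain to decibels."""
    return 20.0 * math.log10(gain)
```

With the default rolloff factor of 1, the gain reduces to reference_distance / distance, so each doubling of distance costs about 6 dB, matching the figure given for the volume cue; larger rolloff factors make sources fade faster.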

To improve positioning further, the API can use additional sound effects provided by extension APIs (such as A3D, EAX or EFX). These effects include reverberation, reflections, and occlusion or obstruction of the sound by intervening objects, and they depend on the environment of the listener and the sources. These extensions also process the effects through the audio drivers, which allows them to take advantage of the sound card’s hardware acceleration.

A brief history of the main APIs and extensions:

- The most commonly known APIs are DirectSound3D (DS3D, a Microsoft product) and OpenAL (an open API mainly supported by Creative Labs).

- As for extensions, two are widespread: EAX (Environmental Audio eXtensions), used by DirectSound3D and OpenAL, and EFX (EFfects eXtension), used by OpenAL. Both extensions are developed by Creative Labs.

- Until the launch of Windows Vista, DirectSound3D and OpenAL used the EAX extension. With Windows Vista, however, Microsoft changed its audio software architecture and no longer provided a direct path to the audio drivers. As a result, DS3D can no longer use the EAX extension.

- OpenAL, however, continued to support the EAX extension. But Creative Labs decided to stop developing EAX and to start a new extension, EFX, better adapted to the OpenAL architecture.

Non-spatialized ambient audio and positioning

In the previous part, we raised some issues that stand in the way of perfect artificial spatialization of sound. Research has therefore explored complements or alternatives to spatialization for positioning.

This part presents some examples of these solutions.

Geiger counter and Sonar

One way of using ambient audio for positioning is to apply a Geiger counter metaphor, also known as the “hot/cold” metaphor. The source is given a short sound that is emitted regularly, and the rate at which the sound repeats indicates the distance to the source [5].

The “hot/cold” metaphor can also be used to indicate the direction of the source. In this case, the repetition rate depends on the listener’s orientation relative to the source’s position. This idea combines a sonar metaphor, in which a source is only detected when the listener faces the right direction, with a “hot/cold” principle, in which the listener gets feedback on his progress in the task, here finding the right direction.

In both cases, the rate curve can take one of two shapes. In the classical one, the rate rises as the goal gets closer. In long-term use, however, a short sound repeating constantly can become annoying, so an application could instead lower the rate as the goal gets closer. This solution is discussed in more detail in 2.2.4, “Silence as success”.
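The two metaphors and the two curve shapes can be sketched as a single mapping from a “closeness” value to the interval between clicks. In the Python sketch below, the 0.1 s to 2 s interval range, the 100 m horizon and the linear shapes are arbitrary assumptions, not values from [5]; the relative bearing is the signed angle in degrees between the listener’s heading and the direction of the source, 0 when facing it.

```python
def click_interval(closeness, fastest_s=0.1, slowest_s=2.0, inverted=False):
    """Seconds between clicks for a closeness in [0, 1] (1 = at the goal / facing it).

    Classical curve: clicks speed up as the goal gets closer.
    Inverted curve: clicks slow down instead, so the display calms down near the goal.
    """
    closeness = min(max(closeness, 0.0), 1.0)
    if inverted:
        closeness = 1.0 - closeness
    return slowest_s - (slowest_s - fastest_s) * closeness


def closeness_from_distance(distance_m, horizon_m=100.0):
    """Geiger-counter use: 1 at the source, 0 at or beyond the horizon."""
    return 1.0 - min(distance_m / horizon_m, 1.0)


def closeness_from_heading(relative_bearing_deg):
    """Sonar-like use: 1 when facing the source, 0 when facing directly away.

    Expects a relative bearing in [-180, 180].
    """
    return 1.0 - abs(relative_bearing_deg) / 180.0
```

With these settings, a listener 10 m from the source hears a click roughly every 0.29 s on the classical curve but every 1.81 s on the inverted one.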

Pitch modulation

The pitch of a sound can be modulated to convey information. In a positioning situation, pitch modulation can represent either the distance or the direction to the source. In their AudioGPS [5], Holland, Morse and Gedenryd use pitch modulation to indicate the direction of the destination. The destination is represented by two sounds: one is fixed and serves as the reference for the right direction, while the pitch of the other changes with the listener’s direction. When the listener faces the right direction, the two sounds have the same pitch; as the listener turns away, the pitches diverge, up to a maximum of one octave when the listener faces the opposite direction.
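The pitch cue described for AudioGPS can be expressed as a frequency ratio between the two tones. The Python sketch below assumes that the varying tone rises above the reference and that the divergence grows linearly in musical pitch (semitones) with the angle between the listener’s heading and the destination, reaching one octave (a 2:1 ratio) at 180°. The source specifies neither detail, so both are assumptions, and the function name is illustrative.

```python
def varying_tone_hz(reference_hz, relative_bearing_deg):
    """Frequency of the direction tone for a given angle to the destination.

    Equal to the reference when the listener faces the destination and one
    octave above it when facing the opposite way (assumed linear in semitones).
    """
    error_deg = abs((relative_bearing_deg + 180.0) % 360.0 - 180.0)  # 0..180
    return reference_hz * 2.0 ** (error_deg / 180.0)
```

With a 440 Hz reference, a listener facing 90° away hears the second tone at about 622 Hz, half an octave (six semitones) higher.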

Music modulation

Music is a desirable component of our lives and can influence our moods. A mobile application could use music to represent the listener’s surroundings, or to indicate a distance or a direction. For example, an application could guide a listener through an area by playing pleasant music in the right places and discordant music in the wrong ones. It could also change the tempo of the music to urge the listener to head somewhere.

“Silence as success” versus “Silence as no news”

When using an audio interface, we tend to apply the latter, “Silence as no news”. A Geiger counter, for instance, makes no noise as long as there is no radiation, but emits short sounds when it gets close to radiation. This behavior makes sense for a Geiger counter, since it deals with a danger to the user: it alerts the user only when the danger is present.

For a mobile application, however, “Silence as success” may be worth considering: when the listener is on track, the audio display does not distract him, whereas when his direction is wrong, he needs to know it. In long-term use, where the listener keeps seeking the right position, direction or distance, he might grow tired of a permanent sound and stop using the application.

Summary:

* Positioning: direction and distance

* Ambient audio and positioning:

* Spatialized ambient audio

* Non-spatialized ambient audio:

* Geiger counter and Sonar

* Pitch modulation

* Music modulation

* “Silence as success”